What is Machine Learning?

Machine learning is a term you hear often in the software development industry, and since 2015 it has become even more popular. So what exactly is machine learning, where did it come from, why is it gaining popularity, and why does it matter to software developers?

Let's start by looking at the definition of machine learning.

Machine learning is a subfield of computer science that evolved from the study of pattern recognition and computational learning theory in artificial intelligence. Machine learning explores the study and construction of algorithms that can learn from and make predictions on data.
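To make "learn from and make predictions on data" concrete, here is a minimal sketch in Python. It is not tied to any product mentioned in this article. It uses one of the simplest learning techniques, an ordinary least-squares fit of a straight line, on a small made-up data set. The "learning" is estimating the line's slope and intercept from examples, and the "prediction" is applying that line to an input the model has not seen.

```python
# Minimal sketch: "learning" a line y = w*x + b from example data with
# ordinary least squares, then using it to predict a new value.
# The data below is hypothetical and only for illustration.

def fit_line(xs, ys):
    """Return (w, b) that minimize the squared error of y = w*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    # The fitted line always passes through the point of means
    b = mean_y - w * mean_x
    return w, b


def predict(model, x):
    """Apply the learned line to a new input."""
    w, b = model
    return w * x + b


# Hypothetical training data: hours of practice vs. test score
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

model = fit_line(hours, scores)
print(round(predict(model, 6), 1))  # prints 72.3
```

Real machine learning systems use far more data, more input features, and more flexible models, but the pattern is the same: fit parameters to past examples, then use them to predict new cases.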

What this means is that machine learning is one part of artificial intelligence (AI), a large field within computer science. Machine learning focuses on predictive algorithms that learn from data. Artificial intelligence refers to any computer system that is able to perform tasks that normally require human intelligence. These tasks include visual perception, speech recognition, decision-making, and translation between languages.

Starting to sound familiar? It should: these are all trending technologies that make the news daily. We see them in applications like virtual assistants (Siri, Cortana, Alexa), Google Translate, Skype, and the Microsoft HoloLens. These AI systems are built from multiple technologies, including machine learning.

In what seems like a relatively short period of time, this complex technology has become a household presence. But did it really happen overnight?

When did it start

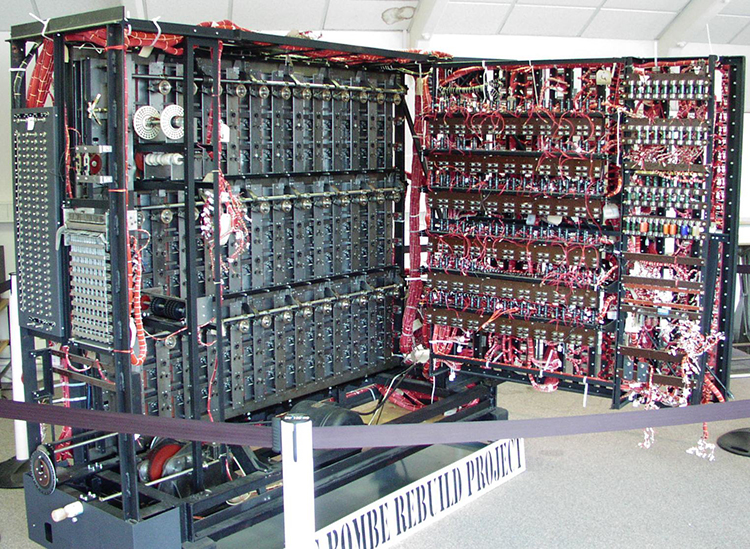

If we take a step back and look at a bigger slice of time, we'll see that the amazing technology we use today was actually conceptualized in the 1950s. Over half a century ago, the foundation for AI and machine learning was laid out. One of the first notable milestones came from Alan Turing in 1950: the Turing test. Turing introduced the test in his paper "Computing Machinery and Intelligence" while working at the University of Manchester. The Turing test was developed to judge a machine's ability to exhibit intelligent behavior.

A replica of the bombe, the machine Alan Turing helped create to decipher messages encoded by the Nazi Enigma machines.

Just six years later, in 1956, the Dartmouth Summer Research Project on Artificial Intelligence began, in what many now consider the defining moment for the field of AI. This summer workshop of mathematicians and scientists was held to explore using machines to solve problems normally handled by humans, including natural language processing, neural networks, the theory of computation, abstraction, and creativity. Many of these concepts form the basis of modern AI and machine learning systems.

The concepts of machine learning are firmly rooted in this era. However, limited computing power, memory, and funding meant the technology advanced slowly until recently. Today we are seeing a surge in the field, with machine learning concepts making their way into consumer products, manufacturing, and software.

Why now

Machine learning has been waiting half a century for its shining moment. Storage has evolved exponentially, with the cost of storing data dropping from 3.6 million dollars per gigabyte in 1961 to less than 3 cents per gigabyte today. This cheap, massive storage has created the Big Data industry and raised the problem of how to analyze such massive data sets. Together with Big Data, advances in computing power and memory have made the complex algorithms behind machine learning practical.

Big Data and machine learning are now primed for opportunity. Computing devices are in consumers' hands, collecting zettabytes of data, and businesses are looking for breakthroughs in the consumer market, manufacturing, and health care. Machine learning can predict consumer trends, reduce manufacturing and health care costs, and increase the bottom line.

In software, consumers' expectations have been raised. Consumers want to interact with software less while benefiting from it more. They are accustomed to speaking to their virtual assistants and letting computers predict their needs.

How does this relate to software development?

As software developers, we need to be able to meet the demands of the consumer. Software is meant to solve complex problems, and machine learning provides a new mechanism for doing this at scale. Machine learning has become a necessary tool in software development not only because it solves these problems, but also because users expect it.

Companies like Microsoft, Amazon, and Google are using machine learning to enhance the user experience. While complex AI systems like virtual assistants usually get the spotlight, there are smaller-scale implementations of machine learning that are useful in our software.

For a good introduction to machine learning concepts and how they can be applied, check out "A visual introduction to machine learning."

As machine learning and other areas of AI advance, so does the tooling. Machine learning tools have become an abstraction over complex mathematical algorithms, opening up the technology to a wider audience. Advanced tools like Microsoft's Azure Machine Learning and Cognitive Services APIs are available to software developers who have little or no knowledge of their inner workings.

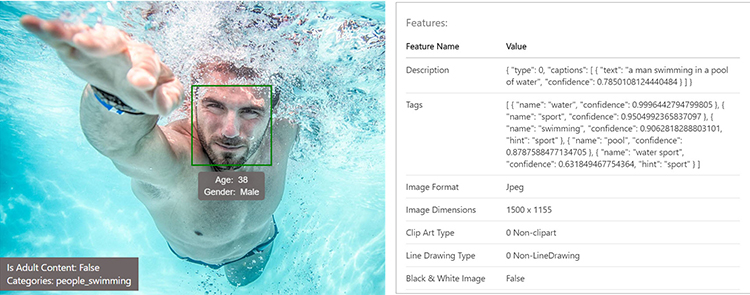

For example, machine learning can be applied to images to enhance an application through computer vision. Computer vision lets developers hand image-related tasks over to an algorithm, automating processes that would otherwise require a person to look at each image. For instance, we can use machine learning to categorize, describe, and recognize content within images. In an application, this may be used to automatically tag images with metadata, detect human emotions, and even filter "adult" content uploaded by users.

Text analysis is another machine learning capability that can be used in various application scenarios. Giving your app the ability to detect a user's sentiment can help audit user-generated content. In an application, this may be used to review comments for negative reviews, detect cyberbullying, or automatically highlight positive feedback.
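To show how an app might learn sentiment from examples, here is a minimal sketch of a multinomial naive Bayes text classifier in plain Python. This is a toy stand-in, not how any particular service such as Cognitive Services implements sentiment analysis. The training comments, labels, and function names are hypothetical, and a real system would need far more data and better tokenization than splitting on spaces.

```python
import math
from collections import Counter

# Hypothetical labeled comments used as training data
training_data = [
    ("great app love it", "positive"),
    ("works great very helpful", "positive"),
    ("terrible app hate it", "negative"),
    ("crashes constantly very bad", "negative"),
]


def train(examples):
    """Count words per label and documents per label."""
    word_counts = {}        # label -> Counter of word frequencies
    doc_counts = Counter()  # label -> number of training documents
    vocab = set()
    for text, label in examples:
        words = text.lower().split()
        doc_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(words)
        vocab.update(words)
    return word_counts, doc_counts, vocab


def classify(model, text):
    """Return the label with the highest naive Bayes log-probability."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in word_counts.items():
        total_words = sum(counts.values())
        # Log prior: how common this label is in the training data
        score = math.log(doc_counts[label] / total_docs)
        for word in text.lower().split():
            # Add-one (Laplace) smoothing so unseen words don't zero out a label
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


sentiment_model = train(training_data)
print(classify(sentiment_model, "love this great app"))  # positive
print(classify(sentiment_model, "hate this bad app"))    # negative
```

Naive Bayes is chosen here only because it fits in a few lines; it treats each word independently, so it misses context such as negation ("not great").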

Custom solutions based on your business's data are possible as well. Predicting process delays, machine downtime, or customer behavior could be the next feature that sets your business apart from the rest.
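As an illustration of a custom prediction built on your own data, here is a minimal sketch that flags likely machine downtime with a k-nearest-neighbors classifier: a new sensor reading gets the majority label of the k most similar historical readings. The sensor fields (temperature and vibration), the records, and the function name are all hypothetical, and kNN is just one simple technique among many that could be used.

```python
import math
from collections import Counter

# Hypothetical historical records: (temperature_c, vibration_mm_s) -> outcome
machine_history = [
    ((60.0, 2.0), "ok"),
    ((62.0, 2.5), "ok"),
    ((65.0, 3.0), "ok"),
    ((85.0, 7.5), "failed"),
    ((88.0, 8.0), "failed"),
    ((90.0, 6.5), "failed"),
]


def predict_knn(history, reading, k=3):
    """Label a new reading by majority vote of its k nearest past readings."""
    # Sort past records by Euclidean distance to the new reading
    nearest = sorted(history, key=lambda record: math.dist(record[0], reading))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(predict_knn(machine_history, (87.0, 7.0)))  # failed
print(predict_knn(machine_history, (61.0, 2.2)))  # ok
```

`math.dist` requires Python 3.8 or later. In practice the features would usually be scaled to comparable ranges first, since raw distances here are dominated by temperature.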

Conclusion

Machine learning has come a long way from a subset of AI concepts created in the 1950s to a modern method for making predictions based on data. It has become something consumers expect, and a new area of expertise required of software developers.

Ed Charbeneau
